3D Animated Landscapes from 2D Images

A master's thesis from 2001 describes a wonderful way of animating Chinese landscape paintings and panoramas to create a three-dimensional walk-through. The method uses image-based modeling and rendering (IBMR) and improves on a previous method called Tour in Picture (TIP). Called multi-perspective TIP, it fixes many of the disadvantages of regular TIP, the main one being short animation times. With multi-perspective TIP the animations can be much longer: TIP animations were generally ten seconds in length, but the animation on their research page runs one minute and thirty seconds. (IBMR is not one of my areas of interest, so I'm unable to summarize their method, but they have very pretty results.)

I highly recommend you check out their video section. They really are able to create pseudo-three-dimensional models of the scene that have depth and can be walked into and through.

NPR Line Drawings Really Work!

In their study they had participants orient what are called gauges onto line drawings of 3D objects. Each gauge is a disc with a line that represents its normal. Participants had to orient the normal of each gauge. A correct disc orientation would make it look like the disc was actually on the surface at its position. The position of each gauge was fixed and each gauge was initially superimposed over the model so as to not cue the participant. The gauges also did not interact with the models.

The study compares six different styles of rendering. Among these six styles were fully shaded images, apparent ridges, suggestive contours and an artist's line drawing of the same object (the authors note that plain contours were rendered over all models other than the artist's image).

Their results are quite long and detailed and take up the majority of their paper. They show that shaded images are the best for depicting shape, but that was the expected outcome and not the point of the experiment. In summary, their data shows that each line drawing method has its own strengths and weaknesses. Most were comparable to, and at times better than, an artist's drawing. Some methods are good for some types of models while other methods are good for other types of models.

This is good news for NPR, but I still want to see these methods developed into something that doesn't bog down processing and destroy interactive frame rates. Although some methods achieve interactive frame rates (30-60fps, though sometimes "interactive" is quoted as 5fps), they are still unsuitable for real-time environments such as games and walk-throughs that are not prerecorded.

Line Drawings (via Abstracted Shading)

When I implemented this, I did so in a ray tracer. This was terribly slow because of the algorithm (multiple passes - description follows) and because no ray tracing optimizations or optimized data structures were used.

Their algorithm first renders a tone image. The tone image is a gray-scale image of the scene that captures Phong diffuse lighting of the objects. This tone image is then blurred with a Gaussian kernel with a width equal to the width we want the lines to be rendered with. This is done for the same reason that you would blur an image before detecting blobs with a Laplacian filter. Finally, this blurred image is iterated over to find thin areas of dark shading. These areas are the ridges of the tone image.
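To make the first two steps concrete, here is a rough sketch of a diffuse tone value and a separable Gaussian blur in plain Python. This is not the paper's shader code: the function names are mine, the tone is a simple clamped dot(normal, lightDir), and cutting the kernel off at three sigma with clamped borders is an assumption. How the desired line width maps to sigma is also left to the caller.

```python
import math

def diffuse_tone(normal, light_dir):
    """Gray-scale diffuse tone: clamped dot(normal, light_dir), both unit vectors."""
    return max(0.0, sum(n * l for n, l in zip(normal, light_dir)))

def gaussian_kernel(sigma):
    """Normalized 1D Gaussian weights, truncated at about 3 sigma (an assumption)."""
    radius = max(1, math.ceil(3 * sigma))
    weights = [math.exp(-(i * i) / (2 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def blur_1d(values, kernel):
    """Convolve one row or column with the kernel, clamping indices at the borders."""
    radius = len(kernel) // 2
    n = len(values)
    out = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = min(max(i + k - radius, 0), n - 1)
            acc += w * values[j]
        out.append(acc)
    return out

def blur_image(image, sigma):
    """Separable Gaussian blur: blur every row, then every column."""
    kernel = gaussian_kernel(sigma)
    rows = [blur_1d(row, kernel) for row in image]
    cols = [blur_1d(list(col), kernel) for col in zip(*rows)]
    return [list(row) for row in zip(*cols)]
```

A larger sigma merges nearby dark regions into wider valleys, which is what lets the later ridge search produce thicker lines.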

The ridge detection routine described in the paper uses a local parametrization of a function at each pixel allowing for a pre-computation of the matrix used to find the function. The matrix arises from solving the system by least squares. In areas where the maximum principal curvature of the function exceeds a threshold a 'line' is output. Since this is all implemented in a fragment shader, the output is really a single dark pixel whose opacity is modulated based on distance from the principal axis of the function fit to the pixel. This allows for anti-aliasing of the lines.
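Here is a small CPU sketch of that idea, under some simplifying assumptions of my own: a 3x3 window, a quadratic fit f(x, y) = a + b*x + c*y + d*x^2 + e*x*y + g*y^2, and the larger eigenvalue of the fitted Hessian standing in for the maximum principal curvature. Because the window is fixed, the least-squares solution reduces to constant per-sample weights that are computed once. The opacity modulation used for anti-aliasing is left out, and the names are hypothetical.

```python
import math

# (dx, dy) offsets of the 3x3 window around a pixel.
OFFSETS = [(dx, dy) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]

# Precomputed least-squares weights for the second-order coefficients
# d (x^2), e (x*y) and g (y^2) of the fitted quadratic.
W_D = [dx * dx / 2 - 1 / 3 for dx, dy in OFFSETS]
W_E = [dx * dy / 4 for dx, dy in OFFSETS]
W_G = [dy * dy / 2 - 1 / 3 for dx, dy in OFFSETS]

def max_curvature(tone, x, y):
    """Larger eigenvalue of the Hessian of the quadratic fitted at (x, y)."""
    samples = [tone[y + dy][x + dx] for dx, dy in OFFSETS]
    d = sum(w * s for w, s in zip(W_D, samples))
    e = sum(w * s for w, s in zip(W_E, samples))
    g = sum(w * s for w, s in zip(W_G, samples))
    # The Hessian is [[2d, e], [e, 2g]]; this is its larger eigenvalue.
    return (d + g) + math.sqrt((d - g) ** 2 + e ** 2)

def detect_ridges(tone, threshold):
    """Mark interior pixels whose fitted curvature exceeds the threshold."""
    height, width = len(tone), len(tone[0])
    mask = [[False] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            mask[y][x] = max_curvature(tone, x, y) > threshold
    return mask
```

A thin dark stripe on a bright background is a valley in the tone image, so the fitted second derivative across the stripe is large and positive there and close to zero in flat regions.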

By dynamically adjusting line width based on distance from the viewer the system allows for level of abstraction. Basically, as lines get further away they become thinner.

The final image is a combination of the output lines and a toon-shaded (either X-Toon or cel-shading) rendering of the scene. The algorithm normally extracts dark lines. Highlights are achieved if the tone image is changed to a specular tone image instead of a diffuse tone image and we invert the line color. My ray tracing system took so long to render because it would have to trace a diffuse tone image, a specular tone image, and a toon-shaded image of the scene before it could combine everything into the final result. A GPU implementation is much more conducive to multiple passes than a ray tracing approach.

Taking an image-processing approach to the line drawing problem solves one major issue currently involved in rendering line drawings. It removes the need for curvature information (from the models themselves), which in itself is a huge boost in frame rate. However, I believe that until there are efficient object-space algorithms for extracting lines accurately from 3D objects, no such line drawings will be accurate and efficient enough to run in real-time while mimicking line drawings by an artist.

NPAR 2009

What I wish I didn't have to miss this year is the talk from Ubisoft about Prince of Persia. For those of you who haven't played the new Prince of Persia, all you need to know is that it makes extensive use of NPR. Jean-François, the lead programmer on the new Prince of Persia, will be giving a talk that focuses on NPR while outlining the game's three-year development cycle.

I hope they release a summary of the talk on ACM SIGGRAPH because I am tired of photorealism in games and want to see NPR take hold. Besides that, NPR in games is one of my main interests.

Geometry Shader Silhouettes

There have been many attempts to extract silhouettes on the GPU through the use of vertex shaders, many of which require extra geometry to be needlessly rendered per edge or extra information sent to the GPU per vertex. With the advent of geometry shaders there have been a few attempts at creating new geometry on the fly whenever a silhouette is found to exist.

A recent paper that's being published by a group of students at a university in Venezuela describes a single-pass geometry shader that effectively extracts silhouettes in real-time. The silhouettes are created as extrusions of the edges that are detected as silhouette edges, and they are properly textured. The paper covers the history discussed below (which I summarize in this post) and then describes their solution.

Geometry shaders have access to adjacency information, which makes them perfect for silhouette extraction. At first it seems like a great idea to just extrude edges whenever a silhouette edge is detected, but this can result in discontinuities in the silhouette where two edges meet at an angle.

Another problem with this approach is generating texture coordinates. Silhouettes are often stylized in non-photorealistic renderings to match the style of the rendering. Without a continuous silhouette it is not possible to generate texture coordinates that correctly tile and rotate a texture along the silhouette.

The method described in the paper solves both the issues of the discontinuities in the silhouette edges and of the generation of the texture coordinates with a slight performance hit over another recent geometry shader method. However, the accuracy their solution provides outweighs the slight performance hit.

The undergraduates who did the research will be presenting their solution at SIACG 2009.

X-Toon: The Extended Toon Shader

Traditional cartoon shading uses a one-dimensional texture map indexed with dot(norm, lightDir), where norm is the surface normal of the object being rendered at the current point and lightDir is the direction of the light from this point.

X-Toon extends this one-dimensional texture look up into two dimensions by using not only the previous dot product as an index but also a second parameter. The second parameter can be anything required to achieve the desired effect. In the paper they describe both an orientation based look up (based on the dot product between the normal and the view vectors) and a depth based look up. See their paper and website for details and results of the implementation.
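The difference between the two lookups is easy to see in a few lines of Python. This is only a sketch with made-up names: the texture is a list of rows sampled with nearest-neighbor filtering, the orientation parameter is simply |dot(norm, viewDir)|, and the depth parameter is a linear remap between a near and far distance. Those two mappings are my simplifications and not necessarily the ones used in the paper.

```python
def clamp01(x):
    return min(1.0, max(0.0, x))

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def sample(texture, u, v):
    """Nearest-neighbor lookup; u selects the column, v selects the row."""
    rows, cols = len(texture), len(texture[0])
    col = min(cols - 1, int(clamp01(u) * cols))
    row = min(rows - 1, int(clamp01(v) * rows))
    return texture[row][col]

def toon_1d(ramp, norm, light_dir):
    """Classic toon shading: a one-dimensional ramp indexed by dot(norm, lightDir)."""
    return sample([ramp], dot(norm, light_dir), 0.0)

def xtoon(texture, norm, light_dir, detail):
    """X-Toon style lookup: the same first index plus a second 'detail' index."""
    return sample(texture, dot(norm, light_dir), detail)

def orientation_detail(norm, view_dir):
    """Illustrative orientation parameter: |dot(norm, viewDir)|."""
    return abs(dot(norm, view_dir))

def depth_detail(z, z_near, z_far):
    """Illustrative depth parameter: linear remap of z into [0, 1]."""
    return clamp01((z - z_near) / (z_far - z_near))
```

For example, `xtoon(texture, norm, lightDir, depth_detail(z, 1.0, 50.0))` would walk down the rows of the texture as the object recedes, so an artist can paint progressively more abstract ramps into the lower rows.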

The following is a video of the orientation and depth based effects created by the X-Toon shader. I created this application as a mini-project in a course at school using the same textures the original authors use. The first effect simulates varying opacity while the second simulates varying lighting based on orientation and view. The red texture on the car simulates backlighting, an effect normally faked by rendering a scene twice, here rendered in one pass using the X-Toon texture mapping. The rest of the video shows depth-based effects and focuses on level of abstraction at far distances.

The best thing about this technique is that it's fast and simple, and new effects can easily be created by any artist. It's also an effective way to blend two different styles of rendering, which is an area of NPR I am currently looking into.

Gooch Shading and Sils

Gooch Shading is a lighting model for technical illustration created by Amy Gooch, Bruce Gooch, Peter Shirley, and Elaine Cohen. They observed that in certain technical illustrations objects were shaded with warm colors and cool colors to indicate direction of surface normals. As a side effect this also indicates depth because often as an object tapers away from us the surface normals begin to point away from us.

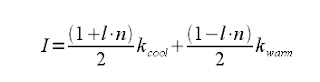

The Gooch lighting model modifies the classic Phong shading model to become

I = ((1 + l·n) / 2) * k_warm + (1 - (1 + l·n) / 2) * k_cool

where l is the light direction and n is the normal of the surface at that point. Classically pure blue is chosen as the cool color and pure yellow is chosen as the warm color and these parameters are denoted b and y ( k_yellow = (y,y,0) and k_blue = (0,0,b) ). These two parameters control the strength of the temperature shift.

If this were the only contribution to the final output color we would see a gradual shift from yellow in areas of high illumination to blue in areas of low or no illumination. To fix this we can give the object a color denoted objColor. Two more parameters (alpha and beta) control the prominence of the object color and the strength of the luminance shift. alpha gives the prominence of the objColor at the warm temperature areas and beta gives the prominence of the objColor at the cool temperature areas.

Using this information k_warm = k_yellow + alpha * objColor and k_cool = k_blue + beta * objColor. These are then substituted into the first equation and the output color is calculated.
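Putting the pieces together, here is a minimal sketch of the shading calculation in Python. It assumes the usual form of the blend, in which k_warm is weighted by (1 + l·n) / 2 and k_cool by the remainder, with l and n as unit vectors and l pointing toward the light. The function name and the default parameter values are only illustrative.

```python
def gooch_color(normal, light_dir, obj_color, b=0.4, y=0.4, alpha=0.2, beta=0.6):
    """Blend a warm and a cool color based on dot(l, n).

    normal and light_dir are unit 3-vectors; obj_color is an (r, g, b) tuple.
    The defaults for b, y, alpha and beta are illustrative choices.
    """
    k_yellow = (y, y, 0.0)
    k_blue = (0.0, 0.0, b)
    # k_warm = k_yellow + alpha * objColor, k_cool = k_blue + beta * objColor
    k_warm = tuple(ky + alpha * c for ky, c in zip(k_yellow, obj_color))
    k_cool = tuple(kb + beta * c for kb, c in zip(k_blue, obj_color))
    l_dot_n = sum(l * n for l, n in zip(light_dir, normal))
    t = (1.0 + l_dot_n) / 2.0  # 1 facing the light, 0 facing directly away
    return tuple(t * w + (1.0 - t) * c for w, c in zip(k_warm, k_cool))
```

With a red object, a surface facing the light comes out as yellow tinted with a little red, while a surface facing away comes out as blue tinted with more red, so the result never falls to black the way a plain diffuse term does.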

The shading alone gives the viewer a good indication of the overall shape of an object but the final image lacks definition. The creators fixed this by rendering the silhouettes of the object as well as using Gooch shading.

There are many methods of extracting silhouettes from an object but I will describe the one implemented in the demo video. The program in the demo video uses an object called an 'edge buffer'. The edge buffer is just a data structure that for each edge in a model stores whether that edge is part of a front facing face and/or if it's part of a back facing face. When rendering silhouettes we iterate through the edge buffer finding those edges that are on both front facing and back facing faces and render a silhouette line on that edge.
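As a rough illustration (this is not the code from the demo), an edge buffer can be sketched in Python as a dictionary from an edge to a pair of flags. The mesh layout, the counter-clockwise winding convention and all of the names here are my own assumptions, and a single view direction is used for every face, which corresponds to an orthographic view.

```python
def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def face_normal(vertices, face):
    """Unnormalized normal of a triangle with counter-clockwise winding."""
    p0, p1, p2 = (vertices[i] for i in face)
    return cross(sub(p1, p0), sub(p2, p0))

def build_edge_buffer(vertices, faces, view_dir):
    """Map each edge to [on_front_face, on_back_face].

    view_dir points from the viewer into the scene, so a face is front
    facing when its normal points against it.
    """
    edge_buffer = {}
    for face in faces:
        front = dot(face_normal(vertices, face), view_dir) < 0
        for i in range(3):
            a, b = face[i], face[(i + 1) % 3]
            flags = edge_buffer.setdefault((min(a, b), max(a, b)), [False, False])
            flags[0 if front else 1] = True
    return edge_buffer

def silhouette_edges(edge_buffer):
    """Edges that lie on both a front-facing and a back-facing face."""
    return sorted(e for e, (front, back) in edge_buffer.items() if front and back)
```

For a tetrahedron viewed so that only one face points toward the viewer, the three edges of that face are exactly the silhouette. The flags have to be rebuilt whenever the view or the model changes, which is where the cost in dynamic scenes comes from.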

This method requires a little storage and a lot of preprocessing and is much more feasible in static scenes. It is equivalent to brute force in dynamic scenes.

Enjoy the demo.